A multidisciplinary team of engineers and clinicians at Vanderbilt University Medical Center has advanced its work on new tools for intraoperative imaging during ophthalmic surgery.

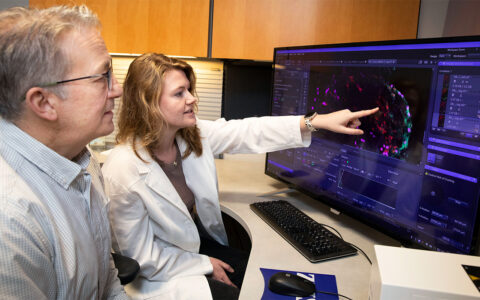

The team recently presented a novel, automated instrument tracking method that leverages multimodal imaging and deep learning to dynamically detect surgical instrument positions and re-center imaging fields for 4D video-rate visualization of ophthalmic surgical maneuvers.

“Our collaborative grants include 3D imaging combined with microscopy to allow visualization of surgical maneuvers and deformations caused by surgical techniques, and to use those images to better understand how postoperative outcomes result from intraoperative decision-making,” said principal investigator Yuankai (Kenny) Tao, Ph.D., an assistant professor of biomedical engineering at Vanderbilt.

Tao’s lab previously developed 4D intraoperative spectrally encoded coherence tomography and reflectometry (iSECTR) technology, which allows simultaneous, intrinsically co-registered en face reflectometry and cross-sectional OCT imaging.

“Until now, the imaging field has been static,” he said. “This new technology takes it to the next level.”

Tao leads the team, which includes Eric Tang, Ph.D., a postdoctoral scholar in biomedical engineering, and ophthalmologist Shriji Patel, M.D., of the Vanderbilt Eye Institute. The researchers collaborate through the Vanderbilt Institute for Surgery and Engineering.

Tang developed the tracking technology, which locates the surgical instrument and steers the OCT imaging field to follow it.

“As the instrument is moving, we can detect its position with OCT, automatically track it, and provide 3D imaging that is tracking it over time, compared to commercial systems that can only provide static 2D images,” he explained. “And because we’re recording, we can review the video post-surgery to see how well we performed.”

Challenges of Ophthalmic Imaging

When imaging 4D dynamics at video rate, Tao explained, the faster the imaging speed, the lower the signal. In tissues outside the eye, the imaging light power can be increased to compensate, but inside the eye such an increase could blind the patient.

In effect, ophthalmic surgeons must image a smaller field to achieve the same resolution that is possible over a larger area in other tissues.

“The trade-off is really between speed and resolution. We can get a densely sampled 3D volume of the entire back of the eye, but that would take tens of seconds to a minute per acquisition,” Tang said. “At that speed, we can’t really visualize live dynamics of surgical interactions.

“It also limits us as to how much area we can survey. You might have a very large macular region with potentially expansive disease – as much as a 20-millimeter range – but what we can see robustly is 3-by-5 millimeters.”
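The speed-versus-field trade-off Tang describes can be made concrete with simple arithmetic. For a point-scanning OCT system, one volume takes roughly (A-scans per B-scan × number of B-scans) ÷ A-scan rate, ignoring scanner flyback. The minimal sketch below assumes a hypothetical 100 kHz A-scan rate and a 10-micrometre lateral sampling pitch; these values and the helper names are illustrative only and are not parameters of the Vanderbilt system.

```python
import math

# Illustrative arithmetic only: the A-scan rate, sampling pitch and volume
# rate used below are hypothetical, not values from the Vanderbilt system.


def volume_time_s(a_scans_x: int, b_scans_y: int, a_scan_rate_hz: float) -> float:
    """Seconds to acquire one point-scanned OCT volume (flyback ignored)."""
    return a_scans_x * b_scans_y / a_scan_rate_hz


def field_width_mm(samples: int, pitch_um: float) -> float:
    """Lateral field covered by `samples` points at a fixed sampling pitch."""
    return samples * pitch_um / 1000.0


def max_square_samples(a_scan_rate_hz: float, volume_rate_hz: float) -> int:
    """Largest N such that an N x N volume fits within one frame period."""
    budget = int(a_scan_rate_hz / volume_rate_hz)  # A-scans available per volume
    return math.isqrt(budget)


if __name__ == "__main__":
    rate = 100_000.0  # hypothetical A-scan rate (A-scans per second)
    pitch = 10.0      # hypothetical lateral sampling pitch (micrometres)

    # Dense volume: 2000 x 2000 A-scans covers 20 mm x 20 mm at this pitch.
    print(volume_time_s(2000, 2000, rate), field_width_mm(2000, pitch))  # 40.0 20.0

    # Video rate (10 volumes/s) at the same pitch, i.e. the same resolution.
    n = max_square_samples(rate, volume_rate_hz=10.0)
    print(n, field_width_mm(n, pitch))  # 100 1.0
```

Holding the sampling pitch, and therefore the resolution, fixed while raising the volume rate reduces the number of A-scans available per volume, so the field of view shrinks accordingly. Under these assumed numbers, a 20 mm dense volume takes 40 seconds, while a 10 volumes-per-second acquisition covers only about 1 mm.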

Ophthalmic microsurgery presents additional challenges due to the relationship between instrument motion and deformation inside the eye, image field distortion, image artifacts, and motion from patient movement and physiologic tremor.

With the new technology, the team can achieve micron-level tracking accuracy across varying instrument orientations, even at extreme instrument speeds and under image defocus.

Currently, the imaging system is being tested in ex vivo porcine eyes. The team is using mock surgeries to demonstrate that the system can track multiple instruments performing different surgical maneuvers: an anterior incision, forceps pulling on tissue, and an injection into the anterior chamber.

“We want to incorporate multiple instruments used by ophthalmic surgeons,” Tang noted. “The first instrument we tested was a 25-gauge internal limiting membrane forceps, and we hope to test lances, knives and other instruments used in anterior segment surgery, as well as instruments for posterior segment operations.”

Translating Technology to the Clinic

The next step is integrating the technology in the operating room.

“We’d like to obtain some patient data to see how it works in the ophthalmology setting,” Tang said. “The best thing about imaging technology is that it’s not invasive, so this is possible.”

Another avenue the team is exploring is how best to display this “moving” 4D image to the surgeon: on an external screen, on a heads-up display, in virtual reality, or as an augmented-reality overlay on the surgical field. The team is also looking at ways to display tissue deformation in real time.

“We see this as the stepping-stone to clinical translation – certainly that’s where our goals are, but there are still a lot of technological challenges that remain,” said Tao. “Longer term, we see this technology as a bridge between what we can image and what robotic surgery can do.”

“Our imaging resolution now far exceeds what retinal surgeons are seeing. Robotic surgery could enhance stability and precision and improve outcomes in these microsurgeries,” Tao added.